Work Experience | Internships | Projects

Kbench & Kgym - a benchmark and platform to test LLMs on Linux kernel bug resolution

I worked with Chenxi Huang to establish the first benchmark and platform that tests LLMs on bug resolution in the Linux kernel. Our experiments showed that LLMs have considerable room for improvement when resolving bugs in low-level, complex software such as kernel code.

COMEX - a tool to generate customized source code representations

I worked with Debeshee Das, Noble Saji Mathews, and Srikanth Tamilselvam (Manager, IBM Research) on creating tools that generate customized source code representations for arbitrary code snippets, whether complete, incomplete, or uncompilable. This capability is especially useful when applying static analysis to incomplete code that is fed to an LLM.

Graph neural networks for the recommendation of candidate microservices

I worked with Srikanth Tamilselvam (Manager, IBM Research) on the Candidate Microservice Advisor project. In this research thread, we experimented with different techniques to represent application software as graphs, which we then partitioned into smaller groups using clustering mechanisms. To this end, I helped formulate this decomposition task as a constrained clustering problem over an embedding space learnt by a heterogeneous graph neural network.
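
As a rough illustration of constrained clustering over an embedding space (not the project's actual method), the sketch below runs a plain k-means in which nodes joined by a must-link constraint are merged into one group before clustering, so they always share a cluster. The function name `constrained_kmeans`, the must-link constraint type, the toy 2-D embeddings, and the naive centroid initialization are all assumptions made for this example; it also assumes `k` does not exceed the number of merged groups.

```python
def constrained_kmeans(embeddings, must_link, k, iterations=10):
    """Toy k-means where must-linked nodes are forced into the same cluster."""
    # Union-find merges must-linked node indices into groups.
    parent = list(range(len(embeddings)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for a, b in must_link:
        parent[find(a)] = find(b)

    groups = {}
    for i in range(len(embeddings)):
        groups.setdefault(find(i), []).append(i)
    group_members = list(groups.values())

    def mean(vectors):
        return [sum(column) / len(vectors) for column in zip(*vectors)]

    def sqdist(u, v):
        return sum((x - y) ** 2 for x, y in zip(u, v))

    # Each group is represented by the mean embedding of its nodes.
    points = [mean([embeddings[i] for i in g]) for g in group_members]
    centroids = [list(p) for p in points[:k]]  # naive deterministic init
    assignment = [0] * len(points)

    for _ in range(iterations):
        assignment = [
            min(range(k), key=lambda c: sqdist(p, centroids[c])) for p in points
        ]
        for c in range(k):
            # Recompute each centroid from the underlying nodes, so larger
            # groups carry proportionally more weight.
            members = [
                embeddings[i]
                for g, a in zip(group_members, assignment)
                if a == c
                for i in g
            ]
            if members:
                centroids[c] = mean(members)

    labels = [0] * len(embeddings)
    for g, a in zip(group_members, assignment):
        for i in g:
            labels[i] = a
    return labels


# Hypothetical 2-D embeddings for five program elements.
embeddings = [[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [5.1, 5.0], [0.2, 0.1]]
labels = constrained_kmeans(embeddings, must_link=[(0, 4)], k=2)
print(labels)
```

In practice the embeddings would come from the trained graph neural network, and the constraints would encode domain knowledge about which program elements belong together.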

Knowledge graph modelling for mainframe application modernization

I worked with Amith Singhee (Director, IBM Research) on creating research tools that simplify how we modernize legacy applications. I helped model legacy mainframe codebases as a very fine-grained knowledge graph (KG). Using this KG, we developed methods that allow application architects to make data-driven decisions. Such informed decisions allow for a smooth, incremental modernization journey for legacy codebases.

Network traffic classification and estimating user experience - UNSW, Sydney

I worked on the classification of encrypted network traffic and on estimating user experience in internet applications. The network domain can be more challenging than language and vision because of its many error-inducing factors, such as network congestion, different network architectures, and varying bandwidth capacities. I researched and extracted reliable features that are robust to these varying conditions and that provide a clear signal for fast and accurate classification.

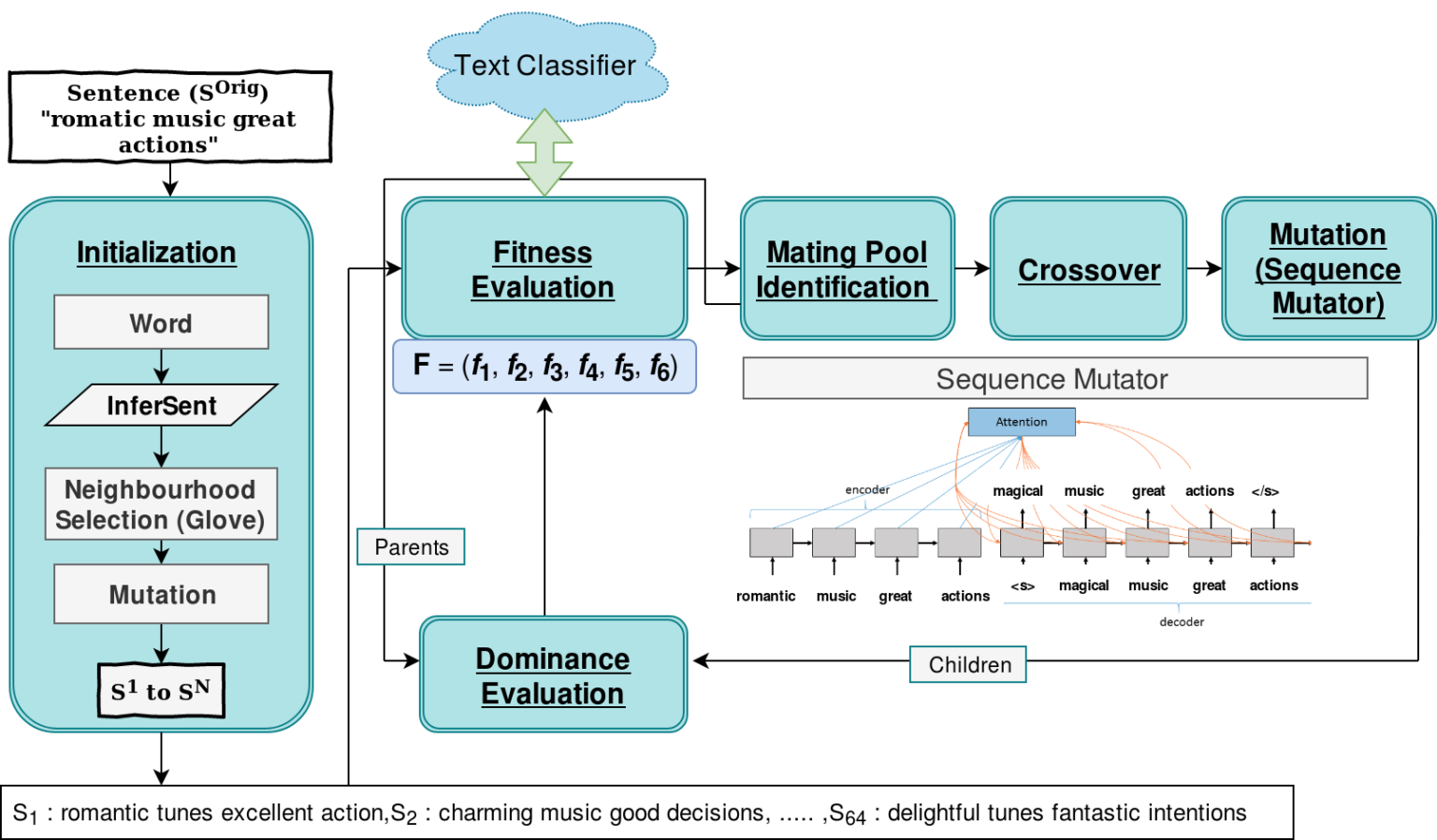

Adversarial black-box attacks on text classifiers using genetic algorithms guided by deep networks - IBM Research AI

My work predominantly focused on studying the robustness of natural language models. We approached this problem by implementing a framework that fabricated inputs (adversarial attacks) for which deep learning models failed. These attacks were generated in a black-box fashion using genetic algorithms guided by deep-learning-based objectives. By analyzing the attacks for which the models failed, we were able to draw inferences about underlying problems with the architecture. We successfully fooled popular natural language models like Word-LSTMs, Char-LSTMs, and ELMo LSTMs.
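
The general shape of a black-box genetic attack can be sketched as follows. This is a minimal toy under stated assumptions, not the framework described above: `toy_classifier` is a hand-written keyword scorer standing in for a deep model, the `SYNONYMS` table and `POSITIVE_WEIGHTS` are hypothetical, and fitness is simply the classifier's score rather than a deep-learning-based objective. The attack only queries the classifier's output and never inspects its internals.

```python
import random

# Hypothetical synonym table; a real attack would use a much larger lexicon.
SYNONYMS = {
    "good": ["fine", "decent"],
    "great": ["notable", "sizable"],
    "love": ["like", "appreciate"],
}

# Hypothetical weights of the stand-in model.
POSITIVE_WEIGHTS = {"good": 1.0, "great": 1.5, "love": 2.0, "like": 0.4}


def toy_classifier(words):
    """Stand-in black box: returns a positive-sentiment score for a sentence."""
    return sum(POSITIVE_WEIGHTS.get(w, 0.0) for w in words)


def mutate(words, original, rng):
    """Swap one word for a synonym of the original word at that position."""
    positions = [i for i, w in enumerate(original) if w in SYNONYMS]
    child = list(words)
    if positions:
        i = rng.choice(positions)
        child[i] = rng.choice(SYNONYMS[original[i]])
    return child


def crossover(a, b, rng):
    """Uniform crossover: take each position from one of the two parents."""
    return [rng.choice(pair) for pair in zip(a, b)]


def attack(original, threshold=1.0, pop_size=8, generations=20, seed=0):
    """Search for a paraphrase whose positive score drops below the threshold."""
    rng = random.Random(seed)
    population = [mutate(original, original, rng) for _ in range(pop_size)]
    for _ in range(generations):
        population.sort(key=toy_classifier)  # lower score = fitter candidate
        best = population[0]
        if toy_classifier(best) < threshold:
            return best
        parents = population[: pop_size // 2]
        children = [
            mutate(crossover(rng.choice(parents), rng.choice(parents), rng),
                   original, rng)
            for _ in range(pop_size - 1)
        ]
        population = [best] + children  # elitism: always keep the best so far
    return min(population, key=toy_classifier)


original = "i love this great movie".split()
adversarial = attack(original)
print(" ".join(adversarial), toy_classifier(adversarial))
```

Keeping the best candidate in every generation means the returned sentence never scores worse than the best initial mutation.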

Anomaly detection using generative adversarial neural networks - CEERI, Pilani

I helped implement unsupervised deep learning models for security and surveillance systems. We adopted Generative Adversarial Networks (GANs) for anomaly detection. By detecting anomalies (unusual events), the models were able to flag dangerous situations like cars or bikes driving on pedestrian paths. As a digression from the main objective, we also used GANs to predict optical flow in videos. All of this research was aimed at delivering a $300,000 consulting project, and I was part of the research team for one full year.

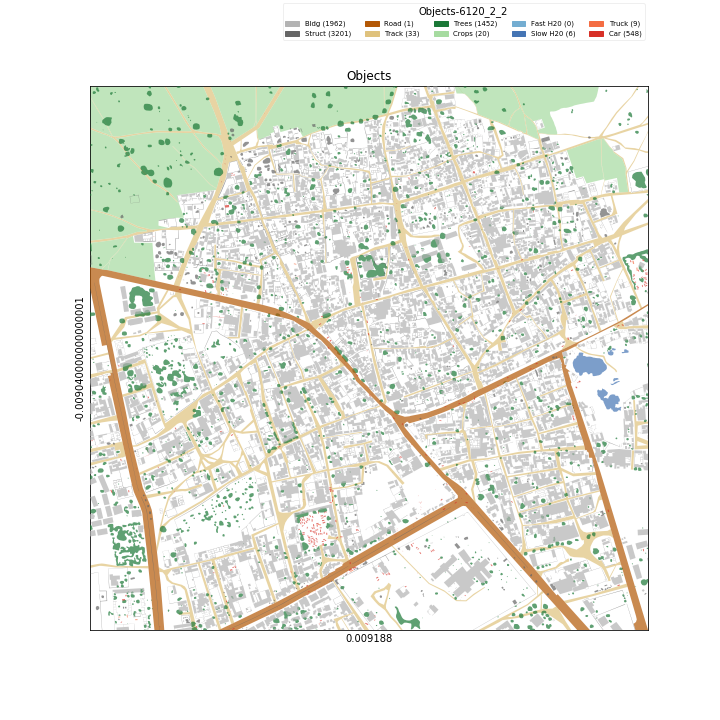

Multispectral satellite image segmentation using U-Nets - ISRO, Dehradun

This project's primary focus was segmenting large-scale satellite images for quick regional development surveys. Tarred roads and large water bodies were targeted and successfully extracted from high-resolution satellite images. After preprocessing the satellite imagery using panchromatic sharpening and bicubic interpolation, we passed these images through a customized U-Net. After fine-tuning, the U-Net was able to segment tracks and water bodies with Jaccard scores of 0.6 and 0.7 respectively.
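
For reference, the Jaccard score of a predicted mask against a ground-truth mask is the size of their intersection divided by the size of their union. The snippet below is a minimal sketch on a made-up 2x3 binary mask; the function name `jaccard_score` and the choice to treat two empty masks as a perfect match are assumptions for this example, not details of the project.

```python
def jaccard_score(pred, target):
    """Intersection over union of two binary masks (nested lists of 0/1)."""
    intersection = 0
    union = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            if p and t:
                intersection += 1
            if p or t:
                union += 1
    # Assumed convention: two empty masks count as a perfect match.
    return 1.0 if union == 0 else intersection / union


# Made-up masks: 1 marks a pixel labelled as the target class.
pred = [[1, 1, 0],
        [0, 1, 0]]
target = [[1, 0, 0],
          [0, 1, 1]]
print(jaccard_score(pred, target))  # 2 shared pixels / 4 in the union = 0.5
```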